Large news sites can publish high quality content regularly and still struggle for search visibility. When outstanding technical SEO issues, confusing website navigation and poor user experience (UX) go unfixed, rankings suffer, because search algorithms analyze and measure multiple website and content elements to determine relevance.

This case study outlines what Benson SEO accomplished for a client following a comprehensive SEO audit. The audit identified priority technical SEO action items, which were integrated into a detailed project plan and implemented over several months.

The site publishes free, high quality, and timely content for a specific industry. The purpose of that content is to get readers to pay for a subscription that offers even more useful, industry-specific news and information not published anywhere else.

After sharing and discussing the audit results with the client, these technical SEO issues were prioritized for fixing:

- Duplicate site versions – erroneous coding in the template footer spawned duplicate URLs that were indexed in search results

- HTTP to HTTPS Migration – the move from non-secure to secure had not yet been planned or implemented

- 404 Error Page Creation & Redirect Cleanup – there was a user-unfriendly 404 page that did not encourage visitors to move further into the site, as well as improperly implemented redirects that hindered crawling by search engine bots

- Pagination, Robots.txt, & XML Sitemap – pagination had not been put in place, the robots.txt file did not reflect the site’s current state and XML sitemaps were incomplete

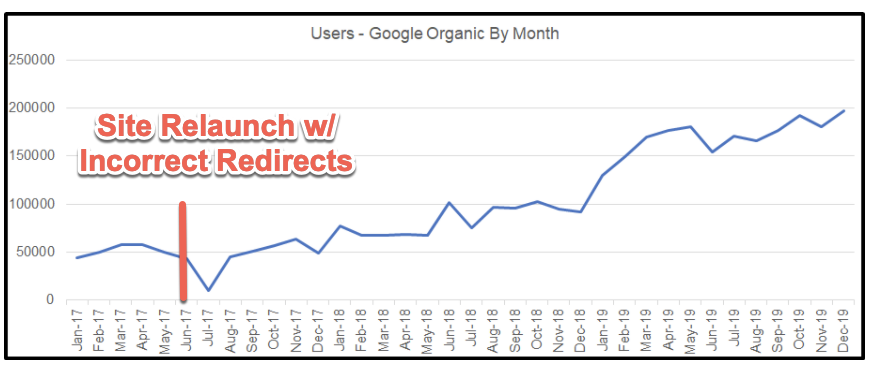

NOTE: The site had been migrated to Drupal before we came on board as the SEO support organization. Two points about that migration are worth knowing before we present what we found and fixed:

- Once the site was moved, indexing fell to almost zero due to a lack of properly implemented redirects. As a result, the site lost almost all traffic for approximately two weeks after the relaunch.

- While the exact reason was never clear, the most likely cause was that the web development team controlled the design and relaunch without support from an SEO specialist, who could have provided a migration/relaunch checklist that would have caught the issues covered in this case study.

With all that being said, let’s look at the issues we discovered and what we did to correct them.

Technical SEO Problem – Duplicate Site Versions

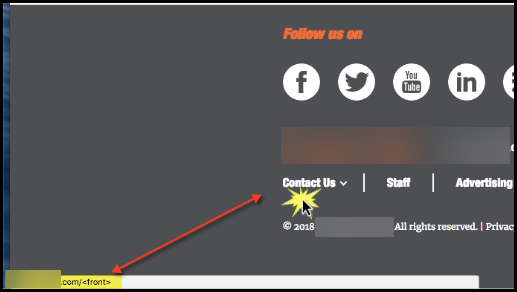

One of the biggest problems was duplicate site versions being indexed in Google search.

During the audit, we discovered erroneous coding in the Contact Us link and several other footer link drop-down features. Users and bots could click or follow these links, which created an entire duplicate website in which URLs featured “<front>” at the end of the page path. Because the pattern carried through to paginated sections of the site, the issue compounded very quickly.

In addition, the site’s development platform was linked from several news articles, which allowed search engine crawlers to index URLs from the development site and created another duplicate content issue. Duplicate sites make it harder for Google’s crawlers to decide which version to crawl and index, so finding these URLs and getting them out of the index helped the important content get discovered, crawled and indexed.
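To illustrate how duplicates of this kind can be spotted in a crawl export, here is a minimal sketch. It assumes a plain list of crawled URLs; the function names and the example.com URLs are hypothetical, and this is not the tooling used in the audit.

```python
from urllib.parse import unquote, urlsplit

# The literal token the duplicate URLs ended with, per the audit findings.
FRONT_TOKEN = "<front>"


def is_front_duplicate(url: str) -> bool:
    """Return True if the URL path ends with the "<front>" token.

    The path is percent-decoded first, so "%3Cfront%3E" is caught as well.
    Query strings (for example pagination parameters) are ignored.
    """
    path = unquote(urlsplit(url).path).rstrip("/")
    return path.endswith(FRONT_TOKEN)


def split_duplicates(urls):
    """Split a list of crawled URLs into (clean, duplicates)."""
    clean, duplicates = [], []
    for url in urls:
        (duplicates if is_front_duplicate(url) else clean).append(url)
    return clean, duplicates


if __name__ == "__main__":
    crawled = [
        "https://www.example.com/news",
        "https://www.example.com/news/%3Cfront%3E",
        "https://www.example.com/news/%3Cfront%3E?page=2",
    ]
    clean, duplicates = split_duplicates(crawled)
    print(len(clean), "clean URL(s),", len(duplicates), "duplicate(s)")
```

The duplicates list can then be cross-checked against what is actually indexed before deciding how to remove the URLs.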

Migrating From HTTP To HTTPS

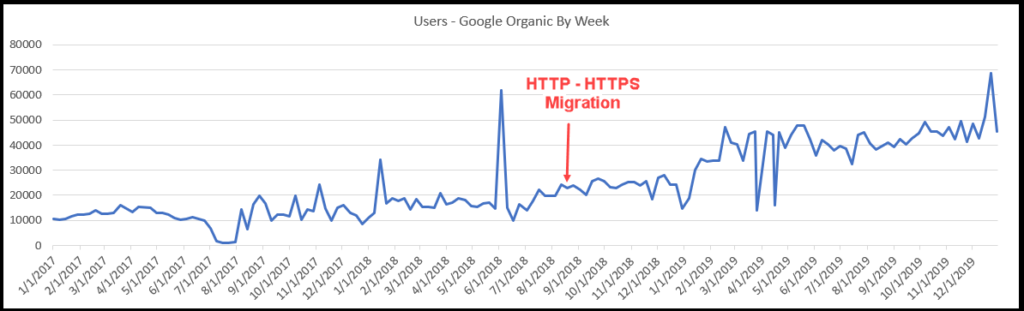

During our audit, we also noted the site had not yet been migrated from HTTP to HTTPS. Google announced back in 2014 that HTTPS is a lightweight ranking signal, and Chrome users were also seeing warnings about non-secure sites, so we knew staying on HTTP could reduce user confidence in navigating the site.

By this time, going from HTTP to HTTPS was a well-documented process, so we used our checklist and core SEO process to avoid common transition issues, including:

- Mixed HTTP/HTTPS content being crawled and indexed

- Improper 3XX redirects – 301 vs 302, 307, etc.

- URL paths not updated from HTTP to HTTPS

- HTML/XML sitemaps not updated

- Google Analytics and Google Search Console not updated with the HTTPS site version

Converting to HTTPS helps with a minor ranking signal, builds user confidence and shows Google that the site is keeping up with recommendations to enhance the user experience. We felt this was low hanging fruit that would continue to help the site’s search visibility.
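One item on a checklist like this, URL paths that still point to HTTP, can be checked per page with a short script. The sketch below is an illustration only: the class name, the set of attributes inspected and the sample markup are our assumptions, and it uses nothing beyond Python’s standard `html.parser` module.

```python
from html.parser import HTMLParser


class InsecureRefFinder(HTMLParser):
    """Collect tag attributes whose URL still starts with http://."""

    # Attributes that commonly carry URLs (an assumed, non-exhaustive set).
    URL_ATTRS = {"src", "href", "action", "poster"}

    def __init__(self):
        super().__init__()
        self.insecure = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names for us.
        for name, value in attrs:
            if name in self.URL_ATTRS and value and value.lower().startswith("http://"):
                self.insecure.append((tag, name, value))


def find_insecure_refs(html: str):
    """Return (tag, attribute, url) tuples for every http:// reference."""
    finder = InsecureRefFinder()
    finder.feed(html)
    finder.close()
    return finder.insecure


if __name__ == "__main__":
    page = (
        '<a href="http://www.example.com/news">News</a>'
        '<img src="https://www.example.com/logo.png" alt="Logo">'
        '<script src="http://cdn.example.com/app.js"></script>'
    )
    for tag, attr, url in find_insecure_refs(page):
        print(f"<{tag}> {attr}={url}")
```

Internal links found this way should be updated to HTTPS, while insecure scripts and images are the ones that cause mixed content warnings.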

404 Error Page Creation & Redirect Cleanup

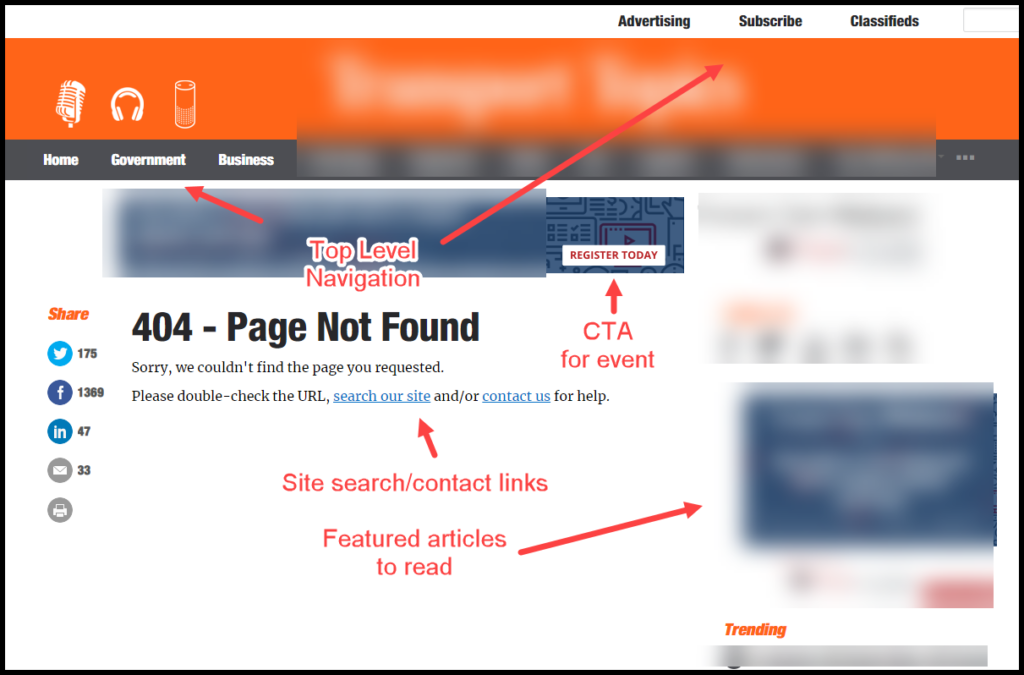

404 errors happen to every site. How the error is handled determines how many users leave the site and whether search engine crawlers can move on to the pages that are live on the web server.

For this site, users and bots were being 302 redirected to a search page that offered only the ability to do a site search. For any web visitor, this could be a frustrating experience that would cause them to leave the site entirely.

We recommended creating a custom 404 error page with text links to improve usability, along with a CTA button above the fold and more navigation options to encourage continued browsing and searching for content. The new 404 page shown below has:

- Top level navigation in place

- CTA at top

- A small selection of articles to choose from in the right-hand navigation

Redirect Cleanup

We found two problems with redirects that hindered overall site crawlability and indexing:

- Old URLs ending in the .asp file extension sent users to a blank 404 page

- Legacy articles were redirected using a temporary 302 redirect, not the permanent 301 status

We helped the web dev team rewrite the rules in the .htaccess file so that all existing redirects return a 301 status and resolve in only one hop.
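The idea of collapsing redirects to a single 301 hop can be sketched as follows. This is a hedged illustration, not the rules written for the client: it assumes the redirects have already been exported into a dictionary that maps each source path to a status code and target, and all paths and names are hypothetical.

```python
def resolve_redirects(redirects, max_hops=10):
    """Follow each redirect to its final target.

    `redirects` maps a source path to a (status_code, target_path) tuple.
    Returns, per source, the final target, the number of hops and the
    status codes seen along the way.
    """
    report = {}
    for source in redirects:
        current, hops, statuses, seen = source, 0, [], {source}
        while current in redirects and hops < max_hops:
            status, target = redirects[current]
            statuses.append(status)
            hops += 1
            if target in seen:
                break  # redirect loop: stop instead of spinning
            seen.add(target)
            current = target
        report[source] = {"final": current, "hops": hops, "statuses": statuses}
    return report


def needs_fix(entry):
    """A redirect needs attention if it chains or uses a non-301 status."""
    return entry["hops"] > 1 or any(status != 301 for status in entry["statuses"])


if __name__ == "__main__":
    exported = {
        "/story.asp": (302, "/legacy/story"),
        "/legacy/story": (301, "/news/story"),
    }
    for source, entry in resolve_redirects(exported).items():
        flag = "FIX" if needs_fix(entry) else "ok"
        print(f"{flag}: {source} -> {entry['final']} ({entry['hops']} hop(s))")
```

Each flagged source can then be rewritten as a single permanent redirect that points straight to its final target.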

Pagination

Google is now lukewarm at best regarding the need for pagination, although having it in place doesn’t hurt or cause crawling/indexing issues. Back when we found the problem, pagination was still a recommended best practice. We recommended putting pagination in place for the top level categories of different article topics, as well as for multiple page sections, to increase the chances of more category pages showing up higher in search results. In addition, we discovered canonicalization was missing, so that was added to this task.

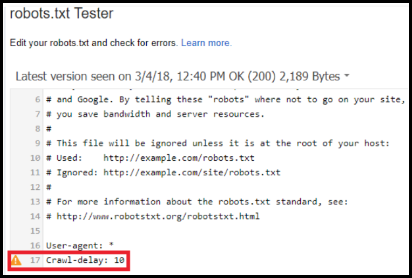

Robots.txt File

We also felt that a properly configured robots.txt file would help search engine crawlers find the most important content. During our audit we discovered that:

- XML sitemaps were not referenced

- The crawl delay setting contained an error
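Both findings can be checked with Python’s standard `urllib.robotparser` module (`site_maps()` requires Python 3.8 or later). The sketch below is illustrative only: the robots.txt content is a made-up example, not the client’s file, and `audit_robots` is a hypothetical helper name.

```python
from urllib.robotparser import RobotFileParser


def audit_robots(robots_txt: str, user_agent: str = "*"):
    """Report the sitemaps referenced and the crawl delay that applies."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        # site_maps() returns None when no Sitemap line is present.
        "sitemaps": parser.site_maps() or [],
        # crawl_delay() returns None when the directive is absent or
        # its value is not a valid integer.
        "crawl_delay": parser.crawl_delay(user_agent),
    }


if __name__ == "__main__":
    example = "\n".join([
        "User-agent: *",
        "Crawl-delay: 10",
        "Disallow: /search",
        "Sitemap: https://www.example.com/sitemap.xml",
    ])
    print(audit_robots(example))
```

An empty sitemap list or an unexpected crawl delay value is a prompt to review the file by hand.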

XML Sitemaps

The XML sitemaps were fairly clean. The site has one sitemap for news articles only and a main XML sitemap for all other pages. The news sitemap had no errors, but the main XML sitemap contained query-based URLs. We worked with the web development team to remove all URLs with query strings.
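As a rough illustration of how query-based URLs can be found in a sitemap, the sketch below parses a sitemap in the standard sitemaps.org format and lists every `<loc>` entry that carries a query string. The function name and sample URLs are hypothetical.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlsplit

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def query_string_urls(sitemap_xml: str):
    """Return every <loc> URL in the sitemap that contains a query string."""
    root = ET.fromstring(sitemap_xml)
    locs = [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]
    return [url for url in locs if urlsplit(url).query]


if __name__ == "__main__":
    sample = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://www.example.com/news</loc></url>"
        "<url><loc>https://www.example.com/news?page=2</loc></url>"
        "</urlset>"
    )
    for url in query_string_urls(sample):
        print("remove:", url)
```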

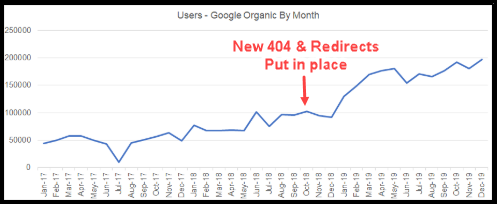

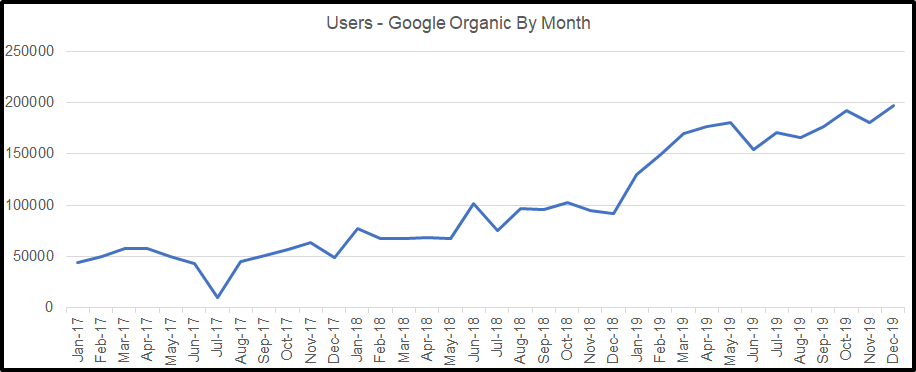

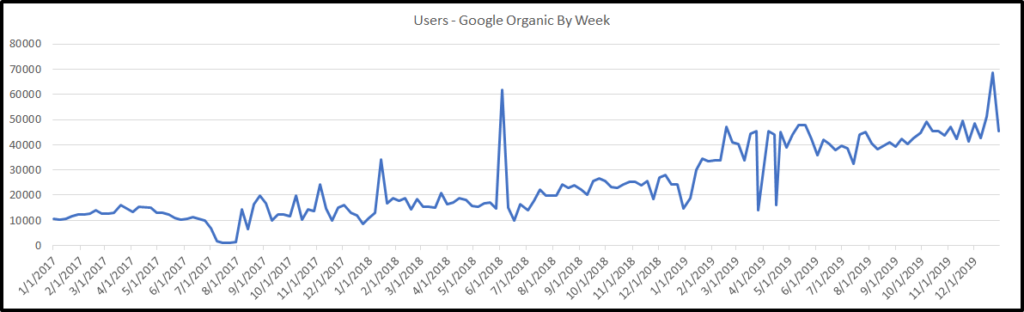

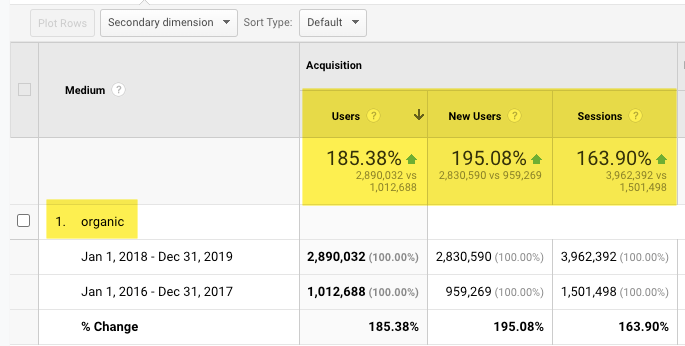

As shown in both monthly and weekly format, the changes made to improve the site’s technical SEO paid off with steady, incremental gains in search visibility.

Once the technical issues were resolved, we turned our attention to classic on-page SEO.

Ongoing Optimization

Our audit also found multiple opportunities to fix URLs with these on-page issues:

- Lack of focused keyword research and topics of interest

- Multiple URLs with too long or too short titles

- Multiple URLs with meta descriptions that were too long, too short or missing

- Missing alt text

- Internal linking opportunities
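Several of these checks lend themselves to simple automation. The sketch below flags title and meta description length problems and counts images without an alt attribute. The character ranges are our assumptions for illustration, not limits published by Google or thresholds from the audit, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser

# Assumed character ranges, for illustration only.
TITLE_RANGE = (30, 60)
DESCRIPTION_RANGE = (70, 160)


class OnPageAudit(HTMLParser):
    """Collect the title, meta description and images lacking alt text."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.images_missing_alt = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = attrs.get("content") or ""
        elif tag == "img" and "alt" not in attrs:
            self.images_missing_alt += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def length_issue(text, low, high):
    """Return "missing", "too short", "too long" or None if within range."""
    if text is None or not text.strip():
        return "missing"
    length = len(text.strip())
    if length < low:
        return "too short"
    if length > high:
        return "too long"
    return None


def audit_page(html: str):
    """Run the on-page checks against one HTML document."""
    audit = OnPageAudit()
    audit.feed(html)
    audit.close()
    return {
        "title": length_issue(audit.title, *TITLE_RANGE),
        "description": length_issue(audit.description, *DESCRIPTION_RANGE),
        "images_missing_alt": audit.images_missing_alt,
    }
```

Calling `audit_page(html)` on a fetched page returns a small dictionary that can be collected across URLs into a spreadsheet for writers and editors.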

Since the client was open to fixing the issues above, we created and delivered custom SEO training for writers and editors so that on-page optimization became part of the writers’ workflow, giving search engine crawlers better context and topic relevance for Google search queries.

Over several months, these on-page fixes helped many news articles rise higher in search results and also improved more generic targeting for evergreen news category queries.

Conclusion & Results:

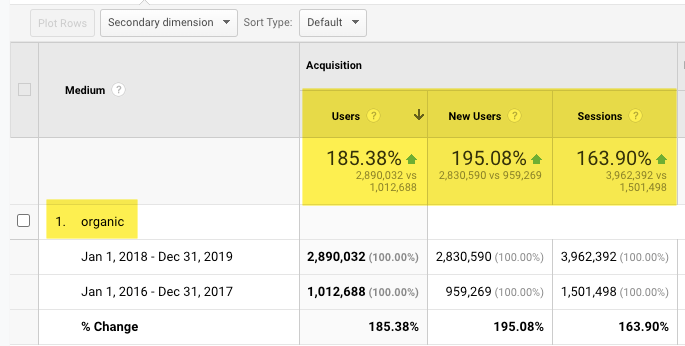

Over a two-year period, significant progress was made in search engine results and visibility across the site. Overall organic traffic grew 185% in the two years we worked on the site compared with the previous two years, when the site wasn’t optimized. As with other clients we’ve helped, we again found that:

- It’s not enough to create great content that’s published regularly

- Site technical issues hinder better search results visibility

- However, once technical issues are fixed and the site is “crawler-friendly,” going back to the basics of classic on-page SEO continues to move pages up in search results

Contact Benson SEO to set up a technical site audit and we can chat about the results you can expect to see.
